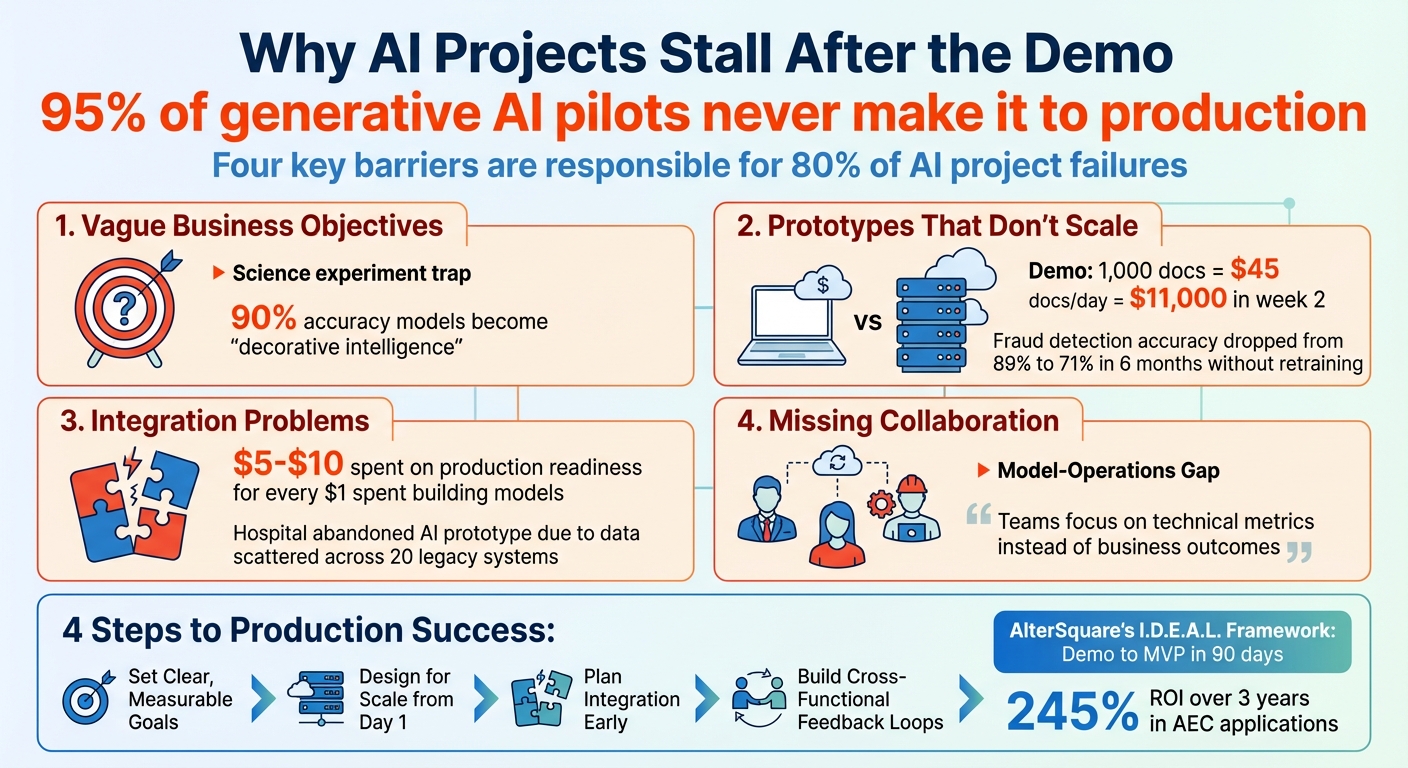

The big question: Why do so many AI projects fail after a promising demo? Here’s the reality: 95% of generative AI pilots never make it to production. Demos often impress, but they don’t address what it takes to integrate AI into business systems.

Key reasons AI projects stall:

- Unclear goals: Many projects start without defined business objectives, leading to irrelevant results.

- Scaling issues: Demos work on small, clean data, but production systems face messy, high-volume data that’s expensive to process.

- Integration challenges: AI often struggles to fit into legacy systems, creating bottlenecks.

- Lack of collaboration: Teams work in silos, causing misalignment between technical efforts and business needs.

The fix? Treat AI projects like any other core business investment. Focus on measurable goals, scalable designs, early system integration, and ongoing collaboration. For example, frameworks like AlterSquare’s I.D.E.A.L. process aim to deliver a functional MVP in 90 days, with scalability and cost efficiency built in from the start.

Bottom line: Successful AI projects require more than flashy demos. They need clear goals, scalable systems, and teamwork to avoid being shelved.



Why AI Projects Stall After the Demo

Moving from a successful AI demo to full production often reveals several major challenges. In fact, four key barriers are responsible for 80% of AI project failures [3].

Vague Business Objectives

Many AI projects kick off without clearly defined goals, leading to what’s known as the "science experiment trap." These projects often showcase impressive technical feats but fail to address real business needs [2]. Teams may focus on metrics like accuracy, but neglect to ask whether the model will drive meaningful decisions.

Ari Joury, AI Researcher: "Bad data is a convenient diagnosis… it allows teams to avoid a more uncomfortable realization: the demo answered the wrong question." [8]

When objectives remain unclear, projects tend to fail not because of technical flaws, but due to a lack of trust and engagement. For example, a single unexplained result in a stakeholder meeting can erode confidence and lead to the model being abandoned [8]. Even a model with 90% accuracy can become "decorative intelligence" – producing outputs that are ultimately ignored [8].

But even when a demo aligns with business goals, scaling it for real-world use introduces new hurdles [3].

Prototypes That Don’t Scale

Demos often operate on small, curated datasets, while production environments deal with messy, high-volume data [3]. Consider a document classification project: during the pilot phase in December 2025, processing 1,000 documents cost $45. But when scaled to 50,000 documents daily in production, costs skyrocketed to $11,000 in just the second week due to expensive GPT-4 calls for edge cases [6].
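
A quick back-of-the-envelope projection can surface this kind of bill before launch. The sketch below takes the pilot figures quoted above and extrapolates them linearly. The function name and the flat per-document cost are assumptions for illustration; real spend depends on how many documents hit the expensive edge-case path.

```python
def project_cost(pilot_docs: int, pilot_cost: float,
                 docs_per_day: int, days: int) -> float:
    """Linearly extrapolate pilot spend to a production volume.

    Assumes cost per document stays flat, which is a simplification.
    """
    cost_per_doc = pilot_cost / pilot_docs
    return cost_per_doc * docs_per_day * days


# Pilot figures from the example: 1,000 documents for $45.
daily = project_cost(1_000, 45.0, 50_000, days=1)
weekly = project_cost(1_000, 45.0, 50_000, days=7)
print(f"per day: ${daily:,.0f}, per week: ${weekly:,.0f}")
```

Even this naive estimate puts production spend in the thousands of dollars per day, which suggests the pilot's own numbers were enough to flag the risk.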

This "it works on my laptop" syndrome highlights the gap between demo-ready prototypes and production-ready systems. Teams frequently overlook MLOps essentials like model versioning, CI/CD pipelines, and automated retraining. For instance, a fraud detection model launched with 89% accuracy, but within six months, its performance dropped to 71% because fraud patterns evolved and there was no retraining pipeline in place [6].
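
A retraining pipeline usually starts with something that notices the decay. Here is a minimal sketch of a rolling accuracy monitor, assuming labeled outcomes (confirmed fraud or not) eventually arrive for each prediction. The class name, window size, and 80% threshold are all hypothetical choices, not part of the case described above.

```python
from collections import deque


class AccuracyMonitor:
    """Track rolling accuracy on labeled production outcomes."""

    def __init__(self, window: int, min_accuracy: float):
        self.outcomes = deque(maxlen=window)  # True where prediction was right
        self.min_accuracy = min_accuracy

    def record(self, predicted: bool, actual: bool) -> None:
        self.outcomes.append(predicted == actual)

    def accuracy(self) -> float:
        if not self.outcomes:
            return 1.0  # no evidence of a problem yet
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        # Only judge once the window is full, to avoid noisy early alarms.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.min_accuracy


# Simulate a window where 71 of 100 predictions were correct.
monitor = AccuracyMonitor(window=100, min_accuracy=0.80)
for i in range(100):
    monitor.record(predicted=True, actual=(i < 71))

if monitor.needs_retraining():
    print(f"accuracy {monitor.accuracy():.0%} is below target, schedule retraining")
```

In a real system the alert would feed a retraining job or a ticket queue; the point is that someone, or something, is watching the number.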

Scaling issues often lead directly to challenges with integrating AI into existing systems [6].

Integration Problems with Existing Systems

Once scalability is addressed, the next major obstacle is integrating AI solutions into legacy systems. This is often referred to as the "production chasm" [6].

Naveen C, Technical Writer, Stackademic: "The production chasm isn’t a surprise. It’s a tax teams pay for optimizing demos instead of systems." [6]

For example, a large North American hospital had to abandon an AI patient care prototype because the necessary data was scattered across 20 legacy systems, making retrieval nearly impossible [7]. Issues like mismatched data formats (e.g., the model expects JSON but receives malformed XML) or authentication problems can turn smooth demos into logistical nightmares [6]. For every $1 spent building an AI model, businesses spend an average of $5 to $10 making it production-ready and compliant [6]. These unexpected costs can derail projects when budgets are based solely on demo-stage expenses.
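
Format mismatches like the JSON-versus-XML case are easier to handle when the model's entry point rejects bad input explicitly instead of failing somewhere downstream. The following is a rough sketch using only the standard library; the `REQUIRED_FIELDS` schema and the `parse_request` helper are made up for illustration.

```python
import json

REQUIRED_FIELDS = {"patient_id", "notes"}  # hypothetical schema


def parse_request(raw: str) -> dict:
    """Validate raw input at the boundary and fail with a clear message."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"expected JSON, got unparseable input: {exc.msg}") from exc
    if not isinstance(payload, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_FIELDS - set(payload)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return payload


# A legacy system sending XML is caught here, not deep inside the model code.
try:
    parse_request("<record><id>p1</record>")
except ValueError as err:
    print(f"rejected: {err}")
```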

Missing Cross-Functional Collaboration

Finally, a lack of collaboration across teams can doom AI projects. When data scientists work in isolation from DevOps teams, business stakeholders, and end users, critical gaps emerge [3]. This "Model-Operations Gap" often leaves no one responsible for monitoring the model’s performance after deployment, and feature priorities may fail to reflect actual user needs [2].

Neil Dhar, Global Managing Partner, IBM Consulting: "Excluding your strategic stakeholders, business unit leaders and collaborators ultimately means to neglect the perspectives and resources you need to succeed." [2]

Without collaboration, teams focus on technical metrics instead of business outcomes, delaying the realization of any financial or operational benefits [6].

How to Move AI Projects from Demo to Production

Shifting an AI project from a demo to full-scale production comes with predictable hurdles. To succeed, organizations need to treat AI as essential infrastructure tied to revenue, not just an experiment [12]. While experiments can fail without major financial consequences, infrastructure must deliver measurable returns [12]. Let’s break down how to tackle these challenges step by step.

Set Clear, Measurable Business Goals

Start by focusing on outcomes rather than the technology itself [9][10]. Before diving into tools or building models, define the specific business problem you’re solving and the economic value it brings. Establish key performance indicators (KPIs) upfront – like “reducing false positives by 15%” or “increasing revenue by 12%” – to avoid relying on subjective judgments [10].
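
One way to keep a KPI from drifting into subjective territory is to write it down as a check that either passes or fails. A minimal sketch for the false-positive example, with hypothetical weekly counts:

```python
def relative_reduction(baseline: float, current: float) -> float:
    """Fractional drop from baseline, e.g. 0.15 for a 15% reduction."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline


def kpi_met(baseline: float, current: float, target_reduction: float) -> bool:
    return relative_reduction(baseline, current) >= target_reduction


# Hypothetical numbers: 400 false positives per week before, 330 after.
# (400 - 330) / 400 = 17.5%, which clears a 15% target.
print(kpi_met(baseline=400, current=330, target_reduction=0.15))
```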

"AI isn’t the goal. Business results are. AI is just one means to get there, sometimes, not the best one." – Okoone [10]

To streamline this process, AlterSquare’s I.D.E.A.L. Framework offers a structured way to move from concept to MVP in 90 days [11]. This framework emphasizes pairing adoption metrics with business impact – like EBITDA gains or reduced churn – right from the start [5]. Assigning a business sponsor to oversee the project from approval to value measurement ensures accountability doesn’t stop at technical execution [5].

A Value-to-Complexity Workshop can also help align priorities. In this workshop, teams from finance, product, data, and legal score use cases based on their revenue potential versus deployment difficulty [12]. Once goals are clearly defined, the next step is to design a system that can handle real-world demands.
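
The output of such a workshop can be as simple as a ranked list. The sketch below assumes each use case gets a 1-to-5 score for value and for complexity and ranks by the ratio; the scoring scale, the ratio, and the example use cases are illustrative assumptions rather than a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    value: int       # 1 (low) to 5 (high) revenue potential
    complexity: int  # 1 (easy) to 5 (hard) deployment difficulty


def rank_use_cases(cases):
    """Highest value per unit of complexity first."""
    return sorted(cases, key=lambda c: c.value / c.complexity, reverse=True)


cases = [
    UseCase("invoice triage", value=4, complexity=2),
    UseCase("churn prediction", value=5, complexity=5),
    UseCase("contract review", value=3, complexity=4),
]
ranked = rank_use_cases(cases)
for case in ranked:
    print(f"{case.name}: {case.value / case.complexity:.2f}")
```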

Design for Scale from Day 1

While demos often rely on clean, limited data, production systems must manage messy, high-volume inputs [9]. To prepare for this, use modular, API-driven architectures and MLOps to manage the model lifecycle [9]. Avoid over-engineering – focus on delivering value rather than chasing unattainable perfection [13].

"Designing for 100% accuracy is like designing a bridge that will withstand any event, including an asteroid strike." – Greg Diamos, Engineering Tech Lead, Landing AI [13]

Key strategies include implementing version control, reducing technical debt, and simplifying features for production. Reusing code and assets can boost development speed by 30% to 50% [4]. For instance, a customer service system processing 200,000 tickets monthly successfully optimized costs by routing 85% of cases to a smaller model (Llama 3.1 8B) and reserving GPT-4 for ambiguous cases. This approach, combined with aggressive caching, cut monthly API expenses from $8,000 to $1,200 [6].
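
The routing pattern in that example can be sketched in a few lines: try the cheap model first, escalate only when it isn't confident, and cache repeated inputs. Both model functions below are stand-ins (no real API is called), and the 0.8 confidence cutoff is an assumed value.

```python
from functools import lru_cache

CONFIDENCE_THRESHOLD = 0.8  # assumed cutoff for trusting the small model

calls = {"small": 0, "large": 0}  # counters to show where traffic goes


def small_model(ticket: str) -> tuple[str, float]:
    """Stand-in for a cheap model: returns (label, confidence)."""
    if "refund" in ticket.lower():
        return "billing", 0.95
    return "other", 0.40


def large_model(ticket: str) -> str:
    """Stand-in for the expensive model used on ambiguous tickets."""
    return "needs_review"


@lru_cache(maxsize=10_000)
def classify(ticket: str) -> str:
    calls["small"] += 1
    label, confidence = small_model(ticket)
    if confidence >= CONFIDENCE_THRESHOLD:
        return label
    calls["large"] += 1  # only ambiguous tickets reach the costly path
    return large_model(ticket)


for ticket in ["Refund please", "Refund please", "My widget hums oddly"]:
    classify(ticket)
print(calls)
```

The duplicate ticket never reaches either model because the cache answers it, and only the ambiguous one touches the expensive path. For reference, going from $8,000 to $1,200 a month is an 85% reduction in spend.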

Another tip: adopt "Policy as Code" to automate model monitoring and risk reviews, which can speed up production timelines [4]. When faced with complex or unclear cases, build protocols to involve human operators rather than striving for AI to handle 100% of scenarios – a costly and often unnecessary goal [13]. A scalable design sets the stage for smooth system integration, discussed next.
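
In practice, "Policy as Code" is often implemented with dedicated tooling, but the core idea fits in plain Python: express release rules as data and evaluate them automatically. The policy names and thresholds below are hypothetical.

```python
# Each policy is a name plus a check over a dict of model metrics.
POLICIES = [
    ("min_accuracy", lambda m: m["accuracy"] >= 0.85),
    ("max_p95_latency_ms", lambda m: m["p95_latency_ms"] <= 500),
    ("has_owner", lambda m: bool(m.get("owner"))),
]


def review(metrics: dict) -> list[str]:
    """Return the names of violated policies; an empty list means pass."""
    return [name for name, check in POLICIES if not check(metrics)]


candidate = {"accuracy": 0.71, "p95_latency_ms": 300, "owner": ""}
violations = review(candidate)
print(violations or "approved")
```

Because the rules live in version control next to the model code, a risk review becomes a repeatable check rather than a meeting.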

Plan for System Integration Early

With goals and scalability in place, early integration planning is essential to avoid production bottlenecks. Involve integration specialists from the beginning, rather than waiting until the demo phase is complete. Document environmental factors that could impact model performance and identify potential risks and dependencies upfront [13].

Using techniques like "Shadowing Mode" allows AI systems to operate alongside existing workflows without disrupting them [13][4]. This approach tests how the model performs under real-world conditions before full deployment. Orchestration engines can also help manage interactions between models, databases, and legacy systems.
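
Here is a rough sketch of what shadow mode can look like in code: the existing logic still produces the answer users see, while the model's output is only logged for comparison. The `handle` function and the two toy decision rules are assumptions made for the example.

```python
import logging

logger = logging.getLogger("shadow")


def handle(request, legacy_fn, model_fn, log):
    """Serve the legacy result; record what the model would have done."""
    result = legacy_fn(request)  # users only ever see this
    try:
        candidate = model_fn(request)
        log.append({"request": request, "legacy": result,
                    "model": candidate, "agree": candidate == result})
    except Exception:
        logger.exception("shadow model failed")  # never break the live path
    return result


shadow_log = []
legacy = lambda amount: amount > 1000  # existing rule: flag large transactions
model = lambda amount: amount > 800    # toy stand-in for the new model
outputs = [handle(a, legacy, model, shadow_log) for a in (500, 900, 1500)]
agreement = sum(entry["agree"] for entry in shadow_log) / len(shadow_log)
print(f"agreement with legacy system: {agreement:.0%}")
```

The disagreements in the log are the interesting part: each one is either a case the model gets wrong or a case the legacy system has been getting wrong all along.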

Define non-functional requirements (NFRs) during the proof-of-concept stage. Metrics like end-to-end latency, requests per second, and security compliance should be evaluated to ensure the system meets technical demands [14]. Tools like Great Expectations or Talend can automate data quality checks, making it easier to audit infrastructure before implementation [11].
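
NFRs are easiest to enforce when they are measured the same way every time. The sketch below times a callable and compares p95 latency and sequential throughput against limits. The limits, function names, and the dummy workload are placeholders, and a real load test would also exercise concurrency.

```python
import statistics
import time


def measure_latencies(fn, requests):
    """Time each call to fn, in seconds."""
    latencies = []
    for req in requests:
        start = time.perf_counter()
        fn(req)
        latencies.append(time.perf_counter() - start)
    return latencies


def check_nfrs(latencies, max_p95_s, min_rps):
    p95 = statistics.quantiles(latencies, n=20)[-1]  # 95th percentile cut point
    rps = len(latencies) / sum(latencies)            # sequential throughput
    return {"p95_s": p95, "rps": rps,
            "pass": p95 <= max_p95_s and rps >= min_rps}


# Dummy workload standing in for a model endpoint.
latencies = measure_latencies(lambda r: sum(range(1000)), range(50))
report = check_nfrs(latencies, max_p95_s=0.2, min_rps=5)
print(report)
```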

Build Cross-Functional Feedback Loops

AI projects often stumble because organizations aren’t fully prepared [5]. Breaking down the silos described earlier takes deliberate structure: regular reviews that bring data scientists, DevOps teams, business stakeholders, and end users together to remove obstacles and maintain alignment [5].

For example, in early 2024, a medium-sized subsidiary of a Latin American conglomerate scaled its generative AI chatbots to handle nearly 60% of customer interactions – up from less than 3% – within six months. With direct CEO sponsorship and structured collaboration frameworks, the company improved model accuracy from 92% to 97%, reduced churn, and increased marketing campaign conversions [5].

Simple tools, like shared spreadsheets or Slack channels, can facilitate continuous feedback from domain experts and stakeholders on AI outputs [14]. A phased delivery approach, combined with ongoing input from users, ensures the project remains aligned with practical needs. Setting a 90-day execution clock can also force clarity, deliver visible results, and build trust with stakeholders, making it easier to secure further funding [12].

Examples and Tools That Work

Building on the challenges of scaling and integration, practical examples and specialized tools, like AlterSquare’s I.D.E.A.L. Framework, provide a clear roadmap for success.

AlterSquare’s I.D.E.A.L. Framework is designed to shrink a typical 12-month AI development process into just 90 days, delivering a functional MVP [11]. This method has shown particular success in fields such as Architecture, Engineering, and Construction (AEC), where the complexity of data and outdated systems often make integration tough. The framework starts with "quick-win" features – think automated document classification or defect detection – and evolves to more advanced solutions, like human-in-the-loop quality assurance.

The framework follows a structured approach: data auditing, implementing early-value features, quality assurance with human oversight, pilot testing, and gradually scaling by integrating into project management systems [11]. This streamlined process not only speeds up development but also ensures cost efficiency and scalability, making it easier to adopt smart tools.

AlterSquare offers both comprehensive development services and flexible resource support, helping startups and tech-focused founders meet tight deadlines. After launch, their services leverage cloud technologies to handle growing user demands. In AEC applications, this strategy has been linked to a 245% return on investment over three years [11].

The cost of implementing AI systems typically ranges from $20,000 to $80,000 for basic setups, while more advanced solutions can cost between $50,000 and $150,000. Despite these upfront expenses, successful AI integration often results in annual savings exceeding $100,000. Tools like Great Expectations and Talend Data Quality play a pivotal role in automating data validation, significantly cutting down on data preparation time – which can consume up to 80% of resources [11] – and delivering substantial cost savings.
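
To put those figures side by side, a simple payback calculation helps. This sketch assumes savings of exactly $100,000 a year, spread evenly across months, which is a simplification of the "exceeding $100,000" figure above.

```python
def payback_months(upfront_cost: float, annual_savings: float) -> float:
    """Months until cumulative savings cover the upfront cost."""
    return upfront_cost / (annual_savings / 12)


for cost in (20_000, 80_000, 150_000):
    print(f"${cost:,} upfront: {payback_months(cost, 100_000):.1f} months")
```

Under that assumption, the low end of the range pays for itself in under three months and the high end in about a year and a half.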

"The key to success lies in data-driven validation, smart use of technology, and a steady feedback loop to improve and grow your product." – AlterSquare [11]

Conclusion

Taking an AI project from a demo to a fully operational system isn’t just about technical execution; it requires a shift in how an organization approaches the initiative. The gap between the roughly 95% of AI pilots that stall and the small minority that reach production (about 11% by another estimate) often comes down to the four factors covered above: clear goals, scalable design, early integration, and cross-functional collaboration [1][4].

These hurdles highlight the importance of a well-rounded strategy. As Ari Joury from Wangari Digest explains:

"Enterprise AI is not just a technical artifact. It is a socio-technical system. It lives at the intersection of technology, incentives, accountability, and human judgment" [8].

In other words, success in AI demands more than just highly accurate models. It requires clear ownership, business-aligned KPIs, and organizational commitment right from the start. For every $1 spent on creating an AI model, companies typically need to invest $3 in change management to ensure the project delivers meaningful results [4]. Skipping this step often leads to systems that generate interesting insights but fail to drive real business decisions [8][2].

AlterSquare’s I.D.E.A.L. Framework is designed to address these challenges head-on with a focused 90-day MVP process. This approach ensures scalability, smooth integration, and continuous collaboration across teams. By embedding these principles, the framework helps bridge the gap between prototype and production, paving the way for solutions that truly scale.

If your AI project is stuck in the demo phase, AlterSquare’s engineering-as-a-service model offers the expertise and structured approach – covering everything from data auditing to cloud scaling – to transform prototypes into production-ready systems that deliver tangible value.

FAQs

What makes it difficult to scale AI projects from a demo to full production?

Scaling AI projects beyond the initial demo often stumbles due to a mix of technical hurdles and organizational roadblocks. Many demos are built without specific business objectives or measurable success metrics, which makes it tough to justify further investment. Plus, the data used in these demos often falls short in terms of quality, governance, and consistency – key factors for reliable, ongoing operations.

On the technical side, prototypes frequently aren’t built with scalability in mind. Common issues include hard-coded assumptions, lack of modularity, and minimal performance testing. These shortcomings make it challenging to handle real-world demands, integrate with existing systems, or meet critical security and compliance requirements. On the organizational front, siloed teams and ambiguous ownership create barriers to collaboration. Add to that a shortage of AI expertise and structured workflows, and projects often remain stuck in the experimental phase.

To transition from demo to production, organizations need to focus on aligning AI initiatives with clear business goals, implementing a strong data strategy, designing systems that can scale, and encouraging cross-functional teamwork. These steps are essential for bridging the gap and delivering AI solutions that are ready for real-world use.

How can organizations successfully integrate AI with their existing systems?

To successfully integrate AI with legacy systems, begin by evaluating your organization’s readiness in three key areas: people, processes, and technology. Having strong executive support is essential – not just for securing funding but also for ensuring the project delivers measurable outcomes that align with business goals. You’ll also need a solid data governance framework and a skilled team that includes data engineers, machine learning experts, and domain specialists. This helps avoid common challenges like isolated pilot projects or assumptions that haven’t been properly validated.

Approach the AI solution as a service that can gradually connect with your existing systems. Use tools like APIs, microservices, or container-based deployments to enable this integration. Start small with a phased strategy: begin with sandbox testing, move on to a pilot program, and then scale up to full deployment. This step-by-step process allows for thorough testing, monitoring, and scalability. To keep the AI aligned with your business objectives and reduce technical debt, set up continuous feedback loops. Use performance dashboards and automated updates to adapt the system to changing needs over time.
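
The phased strategy can be made explicit with a promotion gate: a system moves to the next stage only when it stays within an agreed error budget. The stage names follow the text; the 5% budget and the `next_stage` helper are hypothetical.

```python
STAGES = ["sandbox", "pilot", "production"]


def next_stage(current: str, error_rate: float,
               max_error_rate: float = 0.05) -> str:
    """Promote one stage only if the observed error rate is within budget."""
    idx = STAGES.index(current)
    if error_rate > max_error_rate or idx == len(STAGES) - 1:
        return current  # hold at this stage (or already fully deployed)
    return STAGES[idx + 1]


print(next_stage("sandbox", error_rate=0.02))  # within budget, so promoted
print(next_stage("pilot", error_rate=0.10))    # over budget, so held
```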

How can teams collaborate more effectively on AI projects?

Effective collaboration in AI projects starts by framing them as shared business challenges, not just isolated technical tasks. To set the stage for success, bring together product owners, engineers, data scientists, and business teams early in the planning phase. This ensures everyone is aligned on clear, measurable objectives and understands their specific role in reaching those goals.

Using agile workflows – such as regular check-ins, shared backlog reviews, and short sprints – helps teams spot and resolve potential issues quickly. Collaborative tools like shared notebooks and centralized data repositories play a crucial role in breaking down silos, making it easier for all stakeholders to access and contribute to the project without barriers.

Equally important is creating feedback loops by involving end-users during pilot deployments. Gathering insights from real-world usage allows teams to iterate and refine the model based on actual needs. This process not only improves the solution but also builds trust, encourages accountability, and keeps the project moving forward well beyond the initial demo phase.
