Why Adding AI Often Increases Product Complexity Instead of Reducing It

Taher Pardawala January 14, 2026

When AI is added to products, it often promises faster development and simpler workflows. However, the reality is more complicated. Many companies face challenges like technical debt, unpredictable outputs, and skill gaps, which can make products harder to manage. For example:

- Technical Debt: AI-generated code can be difficult to understand and risky to change, especially when rushed.

- Unpredictable Outputs: AI systems can fail silently or produce inconsistent results, disrupting workflows.

- Skill Gaps: Many teams lack the expertise needed to integrate AI effectively, leading to errors and inefficiencies.

Real-world examples show the risks of poor AI implementation. Zillow lost over $500 million due to flawed AI models, and IBM’s Watson for Oncology struggled with adoption despite a $5 billion investment. These failures highlight the importance of proper planning and testing.

To avoid these pitfalls, focus on small, controlled AI trials, prioritize human oversight, and align AI features with core product goals. Tracking metrics like code complexity and rejection rates can also help manage risks. By taking a measured approach, teams can use AI effectively without overcomplicating their products.


Why AI Adds Complexity: Common Pitfalls

AI holds the promise of simplifying product development, but rushing its integration can create unexpected challenges. When teams dive into AI without proper preparation, they often face three main issues: technical debt that grows over time, workflow disruptions caused by unpredictable outputs, and a lack of expertise. Let’s break each one down and explore how they affect development.

Technical Debt from Rushed AI Implementation

AI can generate code much faster than developers can thoroughly review or understand it [5]. This speed mismatch often leads to what researchers call epistemic debt – a state where teams deploy code they don’t fully grasp.

"Technical debt makes code hard to change. Epistemic debt makes code dangerous to change." – Stanislav Komarovsky, Software Engineering Researcher [5]

The issue is worsened by a practice known as vibe coding, where developers iterate on AI prompts until the output "seems" right without fully examining or understanding the generated code [2][8]. This approach skips intentional design, flooding the codebase with unverified and potentially faulty code, making future modifications risky and time-consuming.

But technical debt isn’t the only obstacle – AI’s unpredictable outputs can throw workflows into chaos.

Workflow Disruptions from Inconsistent AI Outputs

Unlike traditional software that delivers consistent results, generative AI is inherently unpredictable. Even with identical inputs, it can produce varied outputs, which makes it difficult to integrate into workflows that require stability. Compounding the issue, AI models often fail silently, offering guesses for unfamiliar data without signaling uncertainty. This can result in undetected errors creeping into production systems.
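One way to surface this variability before it reaches production is to send the same prompt several times and measure how often the answers agree. The sketch below assumes a hypothetical `generate` callable standing in for whatever model client your stack uses. The 0.8 agreement threshold is an illustrative assumption, not an industry standard.

```python
from collections import Counter
from typing import Callable


def agreement_rate(generate: Callable[[str], str], prompt: str, samples: int = 5) -> float:
    """Send the same prompt `samples` times and return the share of responses
    that match the most common answer after light normalization."""
    if samples < 1:
        raise ValueError("samples must be at least 1")
    # Collapse whitespace and case so trivial formatting differences are not counted as disagreement.
    answers = [" ".join(generate(prompt).split()).lower() for _ in range(samples)]
    _, top_count = Counter(answers).most_common(1)[0]
    return top_count / samples


def is_stable(generate: Callable[[str], str], prompt: str,
              samples: int = 5, threshold: float = 0.8) -> bool:
    """True if outputs agree often enough; 0.8 is an illustrative threshold."""
    return agreement_rate(generate, prompt, samples) >= threshold


if __name__ == "__main__":
    import itertools

    # Hypothetical stand-in for a real model client: cycles through canned replies.
    replies = itertools.cycle(["Refund approved", "refund  approved", "Refund denied"])
    print(agreement_rate(lambda prompt: next(replies), "Is order 42 refundable?", samples=6))
```

In the demo, four of the six replies normalize to the same answer. The resulting rate of about 0.67 falls below the 0.8 threshold, so the prompt would be routed to human review rather than trusted automatically.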

Such inconsistencies lead to validation bottlenecks. Development teams are forced to rely on time-intensive human reviews, which slow down delivery. Even small changes can trigger cascading regressions, requiring full-scale retesting. In extreme cases, high false-positive rates can cause alert fatigue, where operators start ignoring the AI system altogether just to maintain workflow efficiency.

These workflow challenges, combined with a lack of expertise, make seamless integration even harder.

Expertise Gaps in AI Integration

Effectively managing AI tools requires specialized knowledge that many teams simply don’t have. In fact, 38% of organizations using AI for coding report lacking confidence in the results [7]. AI also struggles with complex problems outside familiar territory: one study found that its success rate at generating functional code for hard problems fell from 40% on problems published before 2021 to just 0.66% on problems published afterward [7].

"The benefit of using gen AI in engineering is that it simplifies something that is highly complex. The challenge… is also that it simplifies something that is highly complex." – Deloitte Specialist [7]

While experienced developers can use AI as a force multiplier, critically evaluating its outputs, less experienced team members often rely too heavily on AI. This reliance can lead to missed structural flaws and unstable implementations [6]. AI tools also struggle with context blindness, failing to consider the broader scope of large projects. This can result in duplicated code, architectural inconsistencies, and bypasses of system-wide standards [5][6].

Without expertise in areas like model tuning, maintaining data pipelines, and integrating AI into existing tech stacks, teams often find it challenging to keep their AI projects stable and manageable.

Examples of AI Adding Complexity

When integrating AI into workflows, rushing the process can lead to unexpected complications, as these real-world examples demonstrate.

In a study published in July 2025, the nonprofit METR ran a randomized trial with AI tools like Cursor Pro and Claude 3.5/3.7 Sonnet. Sixteen experienced open-source developers completed 246 tasks and expected AI to cut their completion time by 24%. Instead, tasks took 19% longer when AI was allowed. A key reason was that developers spent extra time reviewing, cleaning up, and fixing AI-generated code that lacked the context of their projects [2].

Zillow faced a major setback when its AI-powered home valuation models, used for its home-flipping business, struggled with "concept drift" – failing to adapt to market volatility. This oversight led to losses exceeding $500 million and forced the company to shut down the program entirely [9].

In February 2024, a Canadian tribunal ruled that Air Canada was liable for incorrect information its AI chatbot had given passenger Jake Moffatt about bereavement fares. What started as an operational hiccup turned into a legal liability [9].

Another example is IBM’s Watson for Oncology, which cost the company over $5 billion between 2018 and 2021. Despite the hefty investment, the tool struggled with limited clinical validation and adoption, illustrating the challenges of applying AI in highly specialized fields [9].

These cases emphasize how poorly planned AI integration can create unforeseen challenges, from operational inefficiencies to financial losses and legal risks. They underscore the importance of thorough planning and risk assessment before deploying AI systems.

How to Identify and Avoid AI Pitfalls Early

Addressing potential AI pitfalls requires early detection and strategic action. Often, the signs of AI-related issues emerge well before they escalate into critical problems. Teams that identify these signals early can adjust course and prevent larger failures.

Audit AI-Generated Outputs for Unnecessary Complexity

Frequent prompt iterations can unintentionally lead to overengineering. When outputs aren’t carefully reviewed, simple tasks can become buried under layers of abstraction and overly intricate interfaces [5].

One clear warning sign is when unit tests become challenging to add or update. This often points to a design that’s too rigid or overly intertwined [5]. As previously mentioned, technical debt makes code difficult to modify, while epistemic debt makes changes risky.

A notable example occurred in December 2025, when researcher Stanislav Komarovsky identified a "Package Hallucination Attack." In this case, an AI assistant recommended a typosquatted package (colourama instead of the legitimate colorama). The malicious package exfiltrated environment variables, even though the code passed functional tests for terminal coloring. The breach went undetected for a significant period [5].
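A lightweight guard against this class of attack is to compare every dependency an AI assistant proposes against an allowlist your team has already vetted. The check then flags names that are close to an approved package without matching it exactly. The sketch below uses Python’s standard `difflib`. The allowlist and the 0.85 similarity cutoff are illustrative assumptions, and a real pipeline would pair this with lockfiles and registry checks.

```python
import difflib

# Hypothetical allowlist of packages your team has already vetted.
APPROVED = {"colorama", "requests", "numpy", "pandas"}


def review_dependencies(proposed: list[str], approved: set[str] = APPROVED,
                        cutoff: float = 0.85) -> dict[str, list[str]]:
    """Sort AI-proposed dependency names into three buckets:
    approved (exact match), suspicious (near-miss of an approved name,
    a typical typosquatting signal), and unknown (needs manual review)."""
    result: dict[str, list[str]] = {"approved": [], "suspicious": [], "unknown": []}
    for name in proposed:
        normalized = name.strip().lower()
        if normalized in approved:
            result["approved"].append(name)
        elif difflib.get_close_matches(normalized, approved, n=1, cutoff=cutoff):
            result["suspicious"].append(name)
        else:
            result["unknown"].append(name)
    return result
```

With this allowlist, `colourama` lands in the suspicious bucket because it is nearly identical to `colorama`. That near-miss is the pattern a typosquatted package relies on, and functional tests would not catch it.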

To counter such risks, implement "evidence-gated merges" for high-impact features. This involves requiring clear evidence of understanding – such as independent tests, architecture reviews, or detailed documentation – before merging AI-generated code [5].
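An evidence-gated merge can be expressed as a simple pre-merge check. The check refuses high-impact, AI-assisted changes unless the required evidence is attached. The field names below (`ai_generated`, `high_impact`, and the evidence keys) are hypothetical. In practice, they would map to pull-request labels or CI artifacts in whatever review tooling you use.

```python
from dataclasses import dataclass, field

# Evidence this sketch assumes a high-impact, AI-generated change must carry.
REQUIRED_EVIDENCE = {"independent_tests", "architecture_review", "design_notes"}


@dataclass
class ChangeRequest:
    title: str
    ai_generated: bool
    high_impact: bool
    evidence: set[str] = field(default_factory=set)


def merge_gate(change: ChangeRequest) -> tuple[bool, set[str]]:
    """Return (allowed, missing_evidence). Only AI-generated, high-impact
    changes are gated; everything else follows the normal review path."""
    if not (change.ai_generated and change.high_impact):
        return True, set()
    missing = REQUIRED_EVIDENCE - change.evidence
    return not missing, missing
```

Returning the missing items, rather than a bare yes/no, tells the author exactly which evidence to produce before the change can merge.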

Test AI Features in Phases

Rolling out AI features across an entire product all at once can be risky. A phased approach allows teams to identify and address issues while they’re still manageable. Start with "discovery" tasks – activities like research, brainstorming, or drafting content where errors have minimal consequences. Once reliability is confirmed, move on to "trust" tasks that are critical to the product’s functionality [12].
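One way to decide when reliability is "confirmed" is to promote a feature from discovery to trust use only when reviewed outcomes clear a threshold with statistical margin, not just on a raw average. The sketch below uses the Wilson score lower bound, a standard confidence interval for a proportion. The 0.9 threshold and 50-review minimum are illustrative assumptions.

```python
import math


def wilson_lower_bound(successes: int, trials: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for a success proportion
    (z = 1.96 corresponds to roughly 95% confidence)."""
    if trials == 0:
        return 0.0
    p = successes / trials
    z2 = z * z
    centre = p + z2 / (2 * trials)
    margin = z * math.sqrt(p * (1 - p) / trials + z2 / (4 * trials * trials))
    return (centre - margin) / (1 + z2 / trials)


def ready_for_trust_tasks(successes: int, trials: int,
                          threshold: float = 0.9, min_trials: int = 50) -> bool:
    """Promote a feature out of the discovery phase only when enough outputs
    have been human-reviewed and the pessimistic success estimate clears the bar."""
    return trials >= min_trials and wilson_lower_bound(successes, trials) >= threshold
```

For example, 95 correct outputs out of 100 reviews give a lower bound of about 0.89. This sketch would therefore keep the feature in the discovery phase at a 0.9 threshold, even though raw accuracy is 95%.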

AI errors also compound across multi-step workflows. A model that is 80% reliable at each step completes a five-step workflow successfully only about 33% of the time (0.8^5 ≈ 0.33), so it fails roughly two-thirds of the time [12]. Similarly, AI agents succeed in only 58% of single-turn tasks, and their success rate drops to 35% in multi-turn interactions [12].
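The compounding effect comes from multiplying per-step success rates. The short calculation below assumes each step succeeds or fails independently, which is an idealization; in real systems failures are often correlated.

```python
def workflow_success(per_step_reliability: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent steps."""
    if not 0.0 <= per_step_reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    return per_step_reliability ** steps


for steps in (1, 3, 5, 10):
    success = workflow_success(0.8, steps)
    print(f"{steps:>2} steps: {success:.1%} success, {1 - success:.1%} failure")
```

At five steps, the result is 32.8% success and 67.2% failure. At ten steps, success falls to about 10.7%, which is why long automated chains need checkpoints.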

To test AI functionality safely, use innovation sandboxes. For instance, a major oil and gas company reduced the time to set up dedicated generative AI sandboxes from over six weeks to less than a day by creating a centralized platform with automated governance controls [3].

Incorporate real users and prompt engineers into the design stage, rather than waiting for traditional user acceptance testing. This upstream, human-centered approach ensures AI outputs meet user needs early in the process [7]. Phased trials provide a structured and controlled way to integrate AI without adding unnecessary complexity.

Track Metrics to Monitor AI Impact

Effective management starts with measurement. Beyond tracking speed, it’s essential to monitor how AI affects maintainability and clarity.

- Cyclomatic complexity: This metric tracks the number of independent paths in your code. Rising scores can indicate that AI-generated code is becoming too difficult to manage safely [10].

- Acceptance/rejection rates: Monitoring how often human reviewers approve AI-generated code can reveal changes in output quality. A declining acceptance rate may signal a drop in reliability [7].

- System Trust: This measures the likelihood that your team can explain and safely modify the system, reflecting epistemic debt. If System Trust falls below acceptable levels, pause new feature development until thorough human verification is completed [5].
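To make the cyclomatic-complexity metric concrete, the sketch below approximates McCabe complexity for each Python function by counting decision points with the standard `ast` module. It is a simplified approximation, and dedicated tools such as radon or the mccabe plugin for flake8 handle more cases. The threshold of 10 is a commonly used rule of thumb, treated here as an assumption.

```python
import ast

# Node types that each add one independent path (a simplified McCabe count).
DECISION_NODES = (ast.If, ast.IfExp, ast.For, ast.AsyncFor, ast.While, ast.ExceptHandler)


def function_complexity(func: ast.AST) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points.
    Simplification: nested functions also count toward the enclosing function."""
    complexity = 1
    for node in ast.walk(func):
        if isinstance(node, DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two extra short-circuit paths.
            complexity += len(node.values) - 1
    return complexity


def complexity_report(source: str, threshold: int = 10) -> dict[str, tuple[int, bool]]:
    """Map each function name to (complexity, exceeds_threshold)."""
    report = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = function_complexity(node)
            report[node.name] = (score, score > threshold)
    return report
```

Running a report like this on each AI-assisted pull request and charting the scores over time shows whether generated code is trending toward harder-to-test designs.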

Additionally, keep an eye on API leakage and the volume of discarded or uncommitted code. High discard rates often point to misaligned AI outputs [7]. Currently, about 30% to 50% of teams’ innovation time with generative AI is spent on compliance adjustments or rework, which could indicate deeper integration issues [3].

Finally, set up a centralized AI gateway to log all prompts and responses. This creates an audit trail that can highlight issues like hallucinations, data policy violations, or consistently poor prompt outcomes [3].
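A minimal version of such a gateway is a wrapper that every model call passes through. The wrapper appends a structured record of each prompt and response to an append-only log. This is a sketch under several assumptions: `call_model` is a hypothetical stand-in for your provider’s client, the log is a local JSON Lines file, and the `flagged` rule is a placeholder for real hallucination or policy checks.

```python
import json
import time
import uuid
from pathlib import Path
from typing import Callable


class AIGateway:
    """Route every model call through one place and keep an audit trail."""

    def __init__(self, call_model: Callable[[str], str], log_path: Path):
        self.call_model = call_model  # hypothetical provider client
        self.log_path = log_path

    def complete(self, prompt: str, user: str, feature: str) -> str:
        started = time.time()
        response = self.call_model(prompt)
        record = {
            "id": str(uuid.uuid4()),
            "timestamp": started,
            "latency_s": round(time.time() - started, 3),
            "user": user,
            "feature": feature,
            "prompt": prompt,
            "response": response,
            # Placeholder check; a real gateway would run policy and hallucination detectors here.
            "flagged": "confidential" in response.lower(),
        }
        with self.log_path.open("a", encoding="utf-8") as log:
            log.write(json.dumps(record) + "\n")
        return response
```

The log holds raw prompts, so it typically needs the same access controls and retention rules as other sensitive data.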


AlterSquare’s Approach: Simplifying AI Integration

AlterSquare takes the complexity out of AI integration by addressing potential challenges head-on. Instead of rushing to implement AI features, they follow a structured, phased approach to test ideas early. This strategy helps them avoid unnecessary technical debt and ensures workflows remain uninterrupted.

At the heart of this methodology is their proprietary I.D.E.A.L. Framework, which provides a step-by-step guide to adopting AI in a controlled and efficient manner.

The I.D.E.A.L. Framework for Controlled AI Adoption

The I.D.E.A.L. Framework breaks AI integration into five stages: discovery, design, validation, agile sprints, and post-launch support. This streamlined process transforms what might traditionally take 12 months into a 90-day MVP cycle, all while maintaining a scalable foundation [13].

- Discovery Phase: Teams identify which tasks are best suited for AI solutions.

- Validation Phase: Human-in-the-loop (HITL) workflows ensure accuracy by involving humans in data labeling, training, and output verification. This minimizes workflow disruptions and improves the reliability of AI outputs.

As one Deloitte expert aptly put it:

"The benefit of using gen AI in engineering is that it simplifies something that is highly complex. The challenge of using gen AI in engineering is also that it simplifies something that is highly complex" [7].

To avoid technical debt, AlterSquare uses evidence-gated merges. These rely on rigorous testing, thorough reviews, and proper documentation to validate AI outputs before they are merged.

Bridging Expertise Gaps with Team Augmentation

While the I.D.E.A.L. Framework provides the roadmap, having the right expertise ensures smooth execution. AlterSquare enhances teams with specialists skilled in AI model tuning, data pipeline management, and seamless integration into existing systems. This approach addresses a major industry challenge: 38% of organizations struggle with confidence in their AI coding, and only 27% achieve their expected outcomes [7][14].

Rather than leaving critical tasks to less experienced developers – who might inadvertently create technical debt – AlterSquare’s experts focus on proper documentation and system updates from the outset, ensuring a solid foundation for long-term success.

Real-World Success with AI-Driven MVPs

AlterSquare’s methodical approach to AI has delivered impressive results in real-world projects:

- In July 2025, an eight-mile highway project used AI to optimize schedules, cutting delays and saving over $25 million [13].

- A high-rise construction project in Bangkok reduced its timeline by 208 days with AI-driven tools, significantly lowering carrying costs [13].

- A North American data center project avoided $32 million in potential revenue losses by identifying bottlenecks before they caused delays [13].

These successes stem from focusing on specific challenges – like document sorting, defect detection, or compliance checks – where AI can provide immediate benefits without adding unnecessary complexity [15]. Used within these boundaries, generative AI tools can also speed up development without sacrificing a scalable, maintainable architecture.

By setting clear guidelines for AI-generated code and prioritizing technical debt management, AlterSquare avoids the common pitfall where 30% to 50% of innovation time with generative AI is wasted on compliance fixes or rework [3]. This disciplined approach ensures that speed does not come at the cost of future maintenance headaches.

Through these measures, AlterSquare demonstrates how AI can be adopted responsibly, delivering fast, meaningful results without overcomplicating systems.

Steps to Reduce Complexity with AI

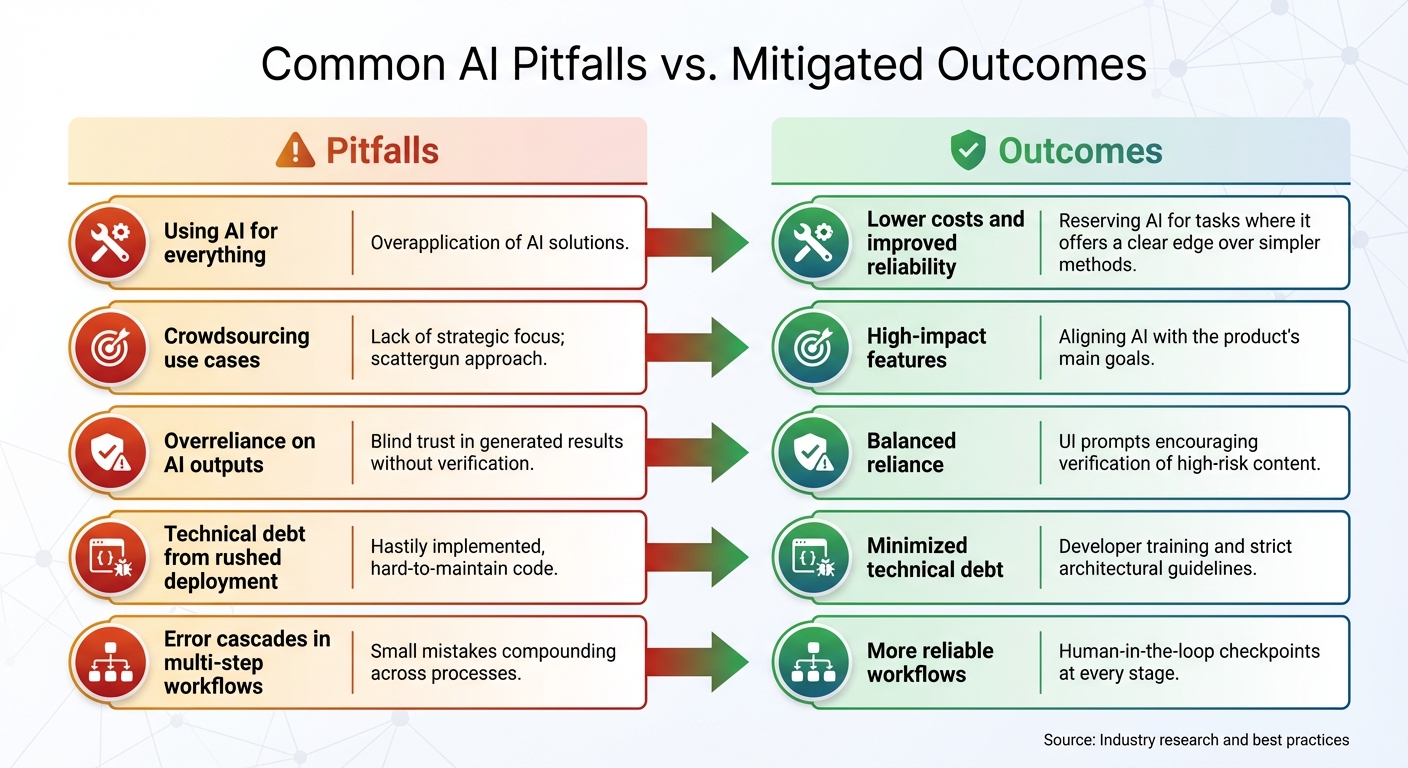

Common AI Pitfalls vs Mitigated Outcomes in Product Development

Integrating AI effectively requires a clear, structured approach that prioritizes solving core problems over chasing trends. A common pitfall for teams is falling into the "AI-first" trap – choosing AI simply because it’s popular, rather than because it’s the best solution for the task. In many cases, simpler methods are more effective [16]. The best starting point is always the problem itself, not the technology. By focusing on the problem, you can ensure that AI capabilities align with your MVP’s objectives and avoid unnecessary complications.

Align AI Features with Core MVP Goals

To steer clear of the technical debt mentioned earlier, every AI feature must directly support your product’s main objectives. Before implementing a feature, ask: does this directly contribute to the core business goals? Drop low-impact features that don’t serve those goals [16], and prioritize the ones that deliver the highest ROI. Reviewing your existing tech stack can help you determine which AI capabilities genuinely add value and which ones merely create complexity.

Using a centralized platform for validated services – like ethical prompt analysis and access controls – can significantly cut down on unnecessary compliance efforts [3]. This strategy can speed up AI approval processes by up to 90% [3], allowing teams to concentrate on impactful features.

Once your AI aligns with your MVP, ensuring high-quality results through human-AI collaboration becomes the next priority.

Implement Integrated Human-AI Reviews

Even the most advanced AI systems need human oversight. Amazon CodeGuru, an AI-powered developer tool for code review and application profiling, is a good example. It flagged a performance issue related to excessive CPU usage, and after applying its recommended fix, Amazon saved 325,000 CPU hours annually [11]. That success didn’t come from blind reliance on AI. It came from combining AI insights with human validation.

AI agents perform well in single-turn tasks, achieving about 58% success, but their performance drops to 35% in multi-turn interactions [12]. To prevent errors from snowballing, use cognitive forcing functions – elements like confirmation dialogues or AI-generated critiques that encourage users to pause and verify outputs before accepting them [4].

As noted in the Microsoft AI Playbook:

"Overreliance on AI happens when users accept incorrect or incomplete AI outputs, typically because AI system design makes it difficult to spot errors" [4].

Design your review processes to distinguish between "discovery" tasks (creative work where errors are less critical) and "trust" tasks (like financial or regulatory work, where mistakes can have serious consequences). For trust tasks, use deterministic processes with strict human checkpoints at every stage to prevent errors from escalating [12].
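The split between discovery and trust tasks can be encoded directly in a workflow runner. Discovery steps run straight through, while trust steps stop at a human checkpoint after each stage, a simple form of cognitive forcing function. In this sketch, the `approve` callback is a hypothetical hook standing in for a review UI or ticketing step, and the step functions are placeholders.

```python
from typing import Callable, Literal

Step = Callable[[str], str]
Approver = Callable[[str, str], bool]  # (step_name, output) -> approved?


def run_workflow(steps: list[tuple[str, Step]], data: str,
                 mode: Literal["discovery", "trust"], approve: Approver) -> str:
    """Run steps in order. In trust mode every intermediate output must be
    approved by a human before the next step sees it, so one bad step
    cannot silently cascade through the rest of the workflow."""
    for name, step in steps:
        data = step(data)
        if mode == "trust" and not approve(name, data):
            raise RuntimeError(f"Human reviewer rejected output of step '{name}'")
    return data
```

Stopping with an error, rather than skipping the rejected step, keeps the workflow deterministic. A rejected trust task never produces a partial result that looks complete.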

Refine AI Features with Post-Launch Support

Ongoing support after launch is essential to managing complexity. Human-in-the-loop reviews should continue for two to three years post-launch to ensure accountability and maintain quality. This approach is particularly important for refactoring AI-generated code, which often lacks proper documentation and can exacerbate legacy system issues.

A centralized AI gateway can log all prompts and responses, enabling real-time analysis to catch hallucinations, ethical biases, or data policy violations. By tracking new metrics like the "rejection rate" of AI-generated suggestions by human reviewers, you can pinpoint where the AI is falling short and make necessary adjustments.
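Tracking the rejection rate does not require special tooling. A rolling window over recent review decisions is enough to spot a shift in quality. The window size and 30% alert threshold below are illustrative assumptions to tune for your own review volume.

```python
from collections import deque


class RejectionRateMonitor:
    """Rolling rejection rate of AI suggestions over the last `window` human reviews."""

    def __init__(self, window: int = 200, alert_threshold: float = 0.3):
        self.decisions: deque[bool] = deque(maxlen=window)  # True = rejected
        self.alert_threshold = alert_threshold

    def record(self, rejected: bool) -> None:
        self.decisions.append(rejected)

    @property
    def rate(self) -> float:
        return sum(self.decisions) / len(self.decisions) if self.decisions else 0.0

    def needs_attention(self) -> bool:
        # Only alert once the window is full, so a few early rejections don't trigger it.
        return len(self.decisions) == self.decisions.maxlen and self.rate > self.alert_threshold
```

Keeping one monitor per AI feature, rather than one global monitor, makes it easier to see which feature is falling short.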

Currently, teams spend about 30% to 50% of their innovation time with generative AI on meeting compliance requirements or waiting for them to be clarified [3]. Automated guardrails, such as microservices that review code for responsible AI practices or detect PII leaks, can streamline this process. These iterative refinements reduce complexity over time, preventing it from piling up.
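As a rough illustration of such a guardrail, the sketch below scans text for a few common PII patterns before it is sent to or returned from a model. The regular expressions are deliberately simple assumptions that cover email addresses, US Social Security-style numbers, and 16-digit card-like numbers. Production systems generally rely on dedicated PII-detection services with far broader coverage.

```python
import re

# Deliberately simple patterns; they will miss many formats and flag some false positives.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){15}\d\b"),
}


def find_pii(text: str) -> dict[str, list[str]]:
    """Return every PII-like match, grouped by pattern name."""
    hits = {name: pattern.findall(text) for name, pattern in PII_PATTERNS.items()}
    return {name: found for name, found in hits.items() if found}


def redact(text: str) -> str:
    """Replace PII-like spans with a labelled placeholder before logging or sending."""
    for name, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{name}]", text)
    return text
```

Running `redact` inside a gateway before prompts are logged keeps the audit trail useful without turning it into a store of personal data.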

| Common AI Pitfall | Mitigated Outcome |
|---|---|
| Using AI for everything | Lower costs and improved reliability by reserving AI for tasks where it offers a clear edge over simpler methods [16] |
| Crowdsourcing use cases | High-impact features that align with the product’s main goals [16] |
| Overreliance on AI outputs | Balanced reliance through UI prompts that encourage verification of high-risk content [4] |
| Technical debt from rushed deployment | Minimized technical debt through developer training and strict architectural guidelines [1] |
| Error cascades in multi-step workflows | More reliable workflows using human-in-the-loop checkpoints at every stage [12] |

Conclusion: Responsible AI Adoption for Simpler Products

Bringing AI into your product doesn’t have to make things more complicated – if it’s approached thoughtfully. The key is focusing on solving actual problems rather than chasing trends. As Chip Huyen puts it:

"'We solve the problem' and 'We use generative AI' are two very different headlines, and unfortunately, so many people would rather have the latter" [16].

The right way forward starts with the problem, not the tech. Before diving into complex AI features, confirm that simpler solutions can achieve your MVP goals. And don’t skip human oversight – especially for critical workflows where mistakes can have serious consequences. This kind of groundwork builds a solid foundation for a smooth, scalable post-launch phase. Remember, getting to demo-ready quality is just the beginning. The real test comes later, with edge cases, hallucinations, and the creeping complexity that often shows up after launch.

A structured plan can help manage these hurdles. AlterSquare’s I.D.E.A.L. Framework is one example. It sets up processes to avoid automation bias, reduce technical debt, and keep AI features aligned with the product’s core objectives. With centralized governance, automated safeguards, and human-in-the-loop validation, teams can reclaim much of the 30% to 50% of generative AI innovation time that often goes to compliance and rework [3]. The same kind of centralized platform can speed up approval processes by as much as 90% while keeping quality and scalability intact [3].

Industry trends back this up. For instance, over 25% of Google’s new code is now AI-generated [17]. However, success comes from treating AI as a tool to enhance human expertise, not replace it. When paired with skilled engineering teams, AI can deliver the 55% productivity boost it promises [1] – without compromising code quality or long-term scalability.

With the right frameworks and expert guidance, AI becomes an accelerator rather than a risk, helping teams build products that stay simple, maintainable, and aligned with user needs, even as technology continues to evolve.

FAQs

How can companies manage technical debt effectively when incorporating AI into their products?

Managing technical debt while integrating AI begins with keeping AI components in your overall debt management strategy. This means logging details like model versions, data pipelines, and AI-generated code right alongside your traditional codebase. By mapping out dependencies and prioritizing issues such as security and performance, teams can tackle critical AI-related debt before it spirals into larger system problems.

To cut down on hidden complexity, it’s important to follow disciplined development practices. For instance, adopt test-driven development for AI-generated code, require peer reviews that focus on explainability, and leverage automated tools to flag overly complex patterns. Incorporating regular refactoring cycles – sometimes called "AI debt sprints" – can also help replace fragile, AI-generated code with more maintainable solutions.

Lastly, invest in training and monitoring tools to prepare your team for AI-specific hurdles. Train developers on managing model lifecycles and handling data assets effectively, and set up dashboards to track issues like performance drift or unexpected resource usage. Taking these proactive steps ensures AI remains a benefit to your organization rather than a source of mounting complexity.
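For drift dashboards, one widely used signal is the Population Stability Index (PSI). PSI compares how a feature or model score is distributed now versus at launch. The sketch below computes PSI over pre-binned proportions. The bin shares are made up for illustration, and the common 0.1 and 0.25 cut-offs are rules of thumb rather than formal standards.

```python
import math


def population_stability_index(expected: list[float], actual: list[float],
                               eps: float = 1e-6) -> float:
    """PSI = sum((a - e) * ln(a / e)) over matching bins.
    `expected` and `actual` are per-bin proportions that each sum to ~1."""
    if len(expected) != len(actual):
        raise ValueError("bins must line up")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


def drift_level(psi: float) -> str:
    # Rule-of-thumb bands often quoted for PSI; tune them for your own data.
    if psi < 0.1:
        return "stable"
    return "moderate" if psi < 0.25 else "significant"


baseline = [0.25, 0.25, 0.25, 0.25]   # score distribution at launch (illustrative)
this_week = [0.10, 0.20, 0.30, 0.40]  # score distribution now (illustrative)
psi = population_stability_index(baseline, this_week)
print(f"PSI = {psi:.3f} ({drift_level(psi)})")
```

With these illustrative numbers, the script reports a PSI of about 0.228, in the "moderate" band. That level is usually a cue to investigate before quality visibly degrades.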

How can I ensure AI outputs are consistent and reliable?

To get consistent and dependable results from AI, it’s crucial to start by setting clear success criteria and measurable goals, such as accuracy rates or response times. Use high-quality datasets to regularly test the system, helping to identify and address potential problems early on.

Protecting data integrity is another key step. This can be achieved by using automated quality checks, applying version control to datasets, and conducting thorough labeling reviews. These practices help minimize errors and reduce the risk of data drift. At the same time, implementing strong model governance – like tracking changes to the model and enforcing rigorous review processes – can prevent hidden bugs from creeping in.
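Version control for datasets can start simply. Record a content fingerprint of each dataset alongside the model version that was trained or evaluated on it, so any silent change to the data shows up as a new fingerprint. The sketch below hashes a directory of files with SHA-256. The manifest layout is a hypothetical example, and dedicated data-versioning tools offer much more.

```python
import hashlib
import json
from pathlib import Path


def dataset_fingerprint(root: Path) -> str:
    """Combine each file's relative path and SHA-256 digest, in sorted order,
    so the same data always produces the same fingerprint."""
    digest = hashlib.sha256()
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        file_hash = hashlib.sha256(path.read_bytes()).hexdigest()
        digest.update(f"{path.relative_to(root).as_posix()}\0{file_hash}\n".encode("utf-8"))
    return digest.hexdigest()


def write_manifest(root: Path, model_version: str, manifest_path: Path) -> dict:
    """Record which data a model version was built or evaluated against (hypothetical layout).
    Keep manifest_path outside `root`, or the manifest would change the fingerprint."""
    manifest = {"model_version": model_version, "dataset_sha256": dataset_fingerprint(root)}
    manifest_path.write_text(json.dumps(manifest, indent=2), encoding="utf-8")
    return manifest
```

Committing the manifest next to the model code puts data changes in the same review history as code changes.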

Adding human oversight is equally important. Incorporate review steps and use explainability tools to evaluate and refine AI outputs before rolling them out. Lastly, establish clear usage policies and safety protocols to ensure the AI stays aligned with your objectives and avoids unintended or harmful actions. Together, these measures create a well-structured, reliable AI system tailored to meet your goals.

How can teams address skill gaps to implement AI solutions effectively?

Addressing skill gaps begins with crafting a thoughtful talent strategy. Many organizations encounter delays due to a lack of AI expertise, but establishing a center of excellence can make a big difference. This means bringing AI specialists together, outlining clear career progression paths, and offering targeted training for engineers, product managers, and domain experts. Pairing less experienced team members with seasoned mentors – whether they’re in-house experts or external consultants – can speed up learning and reduce dependency on quick fixes.

Embedding AI expertise directly into product teams is another smart move. Instead of isolating AI specialists, cross-functional teams that include data scientists, software engineers, and subject-matter experts can work together seamlessly. They can co-develop data pipelines, validate assumptions, and iterate faster. This kind of integration eliminates knowledge silos and ensures smoother collaboration.

Finally, prioritize continuous learning and reusable resources. Keep documentation of best practices, maintain a well-organized library of AI models, and host regular workshops to keep teams informed and efficient. These efforts not only simplify workflows but also prevent unnecessary complexity, laying a solid foundation for scaling AI initiatives without creating technical debt.
